Free Amazon Product and Bestseller Data

By Christian Prokopp on 2024-04-11

Today, we are releasing a massive dataset for non-commercial use, i.e. research or personal projects. It covers Amazon product data for all of 2023: nearly 1.8 billion rows (1,791,511,444) in more than 200 GB of ZSTD-compressed Parquet files, including bestseller and product data from Amazon.com, Amazon.co.uk, and Amazon.de. We hope this answers many of the requests we have received from hobbyists and researchers over the last few years.

You must read and accept the license before downloading and using the data. Download the data from this shared folder. The data is partitioned into folders by request_country=*/year=2023, i.e. the countries are US, GB, and DE, and the year is always 2023. Each leaf folder contains multiple ZSTD-compressed Parquet files. We limited this dump to reduce its size and complexity. Still, be aware that most laptop and desktop computers will fail, or at least struggle, to process all of it.

Access the Data with Athena

The data was exported using AWS Athena and S3. Hence, a convenient way to explore it is to upload it to an S3 bucket, create a table over it, run a repair command to register the partitions, and query it with SQL. Make sure you keep the same folder structure and grant the appropriate permissions. Note that this will incur AWS charges.
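The repair command only finds partitions if the S3 keys keep the local request_country=*/year=*/ folder names. The sketch below, under our own assumptions, maps each local file to such a key and uploads it with boto3's upload_file; s3_keys_for and upload are hypothetical helper names, and boto3 with configured AWS credentials is assumed for the upload step only.

```python
from pathlib import Path


def s3_keys_for(data_dir: Path, prefix: str = "") -> list:
    """Pair each local data file with an S3 key that keeps the partition folders."""
    pairs = []
    for path in sorted(data_dir.glob("request_country=*/year=*/*")):
        # pathlib's "*" also matches hidden files, so skip them explicitly
        if path.is_file() and not path.name.startswith("."):
            rel = path.relative_to(data_dir).as_posix()
            key = f"{prefix.rstrip('/')}/{rel}" if prefix else rel
            pairs.append((path, key))
    return pairs


def upload(data_dir: Path, bucket: str, prefix: str = "") -> None:
    """Upload all data files to s3://bucket/prefix, preserving the folder layout."""
    import boto3  # imported here so s3_keys_for works without boto3 installed

    s3 = boto3.client("s3")
    for path, key in s3_keys_for(data_dir, prefix):
        s3.upload_file(str(path), bucket, key)
```

If you upload under a prefix, point the table's LOCATION at s3://<YOUR_BUCKET>/<prefix> instead of the bucket root.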

```sql
CREATE EXTERNAL TABLE IF NOT EXISTS <YOUR_TABLE_NAME> (
  name string,
  main_sku string,
  variant_skus array<string>,
  brand string,
  brand_url string,
  image_url string,
  outofstock boolean,
  sku string,
  origin string,
  price decimal(10,2),
  price_range_start decimal(10,2),
  price_range_end decimal(10,2),
  price_source string,
  price_currency string,
  http_code smallint,
  review_avg decimal(10,2),
  review_count int,
  url string,
  html_len int,
  skus array<string>,
  ranks map<string,int>,
  breadcrumbs array<string>,
  scraper string,
  extra_data map<string,string>,
  crawl_id string,
  request_ts timestamp,
  request_ip string,
  request_city string
)
PARTITIONED BY (request_country string, year int)
STORED AS parquet
LOCATION 's3://<YOUR_BUCKET>';

MSCK REPAIR TABLE <YOUR_TABLE_NAME>;
```

The beauty of Athena is that it queries and processes the data serverlessly, without the limitations of your local computer.
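You can also drive Athena from Python. A minimal sketch, assuming a boto3 Athena client and an S3 location for query results: it starts a query with start_query_execution and polls get_query_execution until the query finishes. run_athena_query and COUNT_BY_COUNTRY are hypothetical names; the client is passed in so the function can be tried without AWS access.

```python
import time


def run_athena_query(client, sql: str, database: str, output_location: str,
                     poll_seconds: float = 1.0) -> str:
    """Start an Athena query, wait until it finishes, and return its execution id."""
    resp = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = resp["QueryExecutionId"]
    while True:
        status = client.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]["Status"]
        state = status["State"]  # QUEUED, RUNNING, SUCCEEDED, FAILED or CANCELLED
        if state == "SUCCEEDED":
            return query_id
        if state in ("FAILED", "CANCELLED"):
            raise RuntimeError(f"Query {query_id} {state}: {status.get('StateChangeReason', '')}")
        time.sleep(poll_seconds)


# Filtering on the partition columns limits how much data Athena scans (and bills for).
COUNT_BY_COUNTRY = """
SELECT request_country, count(*) AS total_rows
FROM <YOUR_TABLE_NAME>
WHERE year = 2023
GROUP BY request_country
"""
```

With real credentials, you would pass boto3.client("athena"), replace the placeholder table name, and then read the rows with the client's get_query_results call using the returned id.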

Access the Data with Python

You can also process the data locally, e.g. if you want to explore a single file or parse many files to extract a subset of the data. But it will be slow, and you may run out of memory. Here is an example of how to load the data to give you a starting point. You will need pandas and pyarrow, and Python 3.10 or later because the script passes root_dir to glob.glob.

```python
# This script is for illustrative purposes only. It is provided as-is and without any warranty. CC BY 4.0 license, please attribute and link to https://www.bolddata.org

import argparse
import glob
import sys
import time
from pathlib import Path

import pandas as pd
import pyarrow.parquet as pq
from pandas import DataFrame


def find_files(data_dir: Path) -> DataFrame:
    """
    Find all parquet files in the given directory and return a DataFrame with the file information.

    Returns:
        DataFrame: Containing country, year, file path, and row count.
    """
    # root_dir requires Python 3.10+
    files = glob.glob('request_country=*/year=*/*', root_dir=data_dir)
    files_metadata = []
    for file in files:
        file_path = data_dir.joinpath(file)
        # Skip hidden files, directories, and empty files
        if file_path.name.startswith('.') or not file_path.is_file() or file_path.stat().st_size == 0:
            continue
        # Partition values live in the folder names, e.g. request_country=US/year=2023
        country = file_path.parent.parent.name.split('=')[1]
        year = int(file_path.parent.name.split('=')[1])
        # Reads only the Parquet footer, not the data itself
        row_count = pq.read_metadata(file_path).num_rows
        files_metadata.append([country, year, file_path, row_count])
    df = pd.DataFrame(files_metadata, columns=['country', 'year', 'file', 'row_count']).astype(
        {'country': 'str', 'year': 'int', 'file': 'str', 'row_count': 'int'})
    return df


def test_load_file(file: str) -> None:
    start_time = time.time()
    print(f'Test loading "{file}". Reading file to dataframe...(this may take a moment)')
    df = pd.read_parquet(file)
    print(df.head())
    elapsed_time = time.time() - start_time
    print(f"Loading took {elapsed_time:.2f}s")


def get_dir_from_args() -> Path:
    parser = argparse.ArgumentParser(description='This script processes parquet files in a given directory.')
    parser.add_argument('--data_dir', default='./data', help='Directory containing the parquet files.')
    args = parser.parse_args()
    print('Use --data_dir to specify data directory (default: ./data).\n'
          'Expected folder structure is <data_dir>/request_country=*/year=*/*')
    if not Path(args.data_dir).is_dir():
        print(f'Error: "{args.data_dir}" is not a directory.')
        sys.exit(1)
    return Path(args.data_dir).resolve()


def main():
    full_dir = get_dir_from_args()
    print(f'Processing parquet files in: "{full_dir}"\n')
    df_files = find_files(full_dir)
    if df_files.empty:
        print('No parquet files found.')
        sys.exit(1)
    print(df_files)
    # Aggregated by country and year; reset_index(name=...) names the summed column
    totals = (df_files.groupby(['country', 'year'])['row_count'].sum()
              .apply(lambda x: f'{x:,}')
              .reset_index(name='total_rows'))
    print(totals.to_string(index=False))
    print(f'\nTotal {len(df_files)} files and {df_files["row_count"].sum():,} rows.\n')
    test_load_file(df_files.iloc[0]['file'])


if __name__ == '__main__':
    main()
```

The script performs two main steps:

- Find all the Parquet files in the data directory and read their metadata. You should get 210 files and 1,791,511,444 rows.

- Load one file to test its data.

On a top-of-the-line laptop, the script's two steps take about 1 s and 23 s, respectively. If you modify the script or have other helpful code related to this data, send us an email or message, and we might share it here.

We do have one request. Let us know how you use the data, credit Bold Data Ltd, and provide a link to this website in your work. Thank you.

Christian Prokopp, PhD, is an experienced data and AI advisor and founder who has worked with Cloud Computing, Data and AI for decades, from hands-on engineering in startups to senior executive positions in global corporations. You can contact him at christian@bolddata.biz for inquiries.

Related Posts

How many words are 128k tokens?

2024-04-12

128k tokens are 96k words in English for ChatGPT 3.5 and 4. The ratio is estimated to be 0.75 words per token. However, the answer is not straightf...

Llamar.ai: A deep dive into the (in)feasibility of RAG with LLMs

2023-09-27

Over four months, I created a working retrieval-augmented generation (RAG) product prototype for a sizeable potential customer using a Large-Langua...

A Guide to the Delta Lake Transaction Log

2023-02-14

Discover the power of the Delta Lake transaction log - ensuring Data reliability and consistency.

Python TDD with ChatGPT

2023-02-02

Programming with ChatGPT using an iterative approach is difficult, as I have demonstrated previously. Maybe ChatGPT can benefit from Test-driven de...

Delta Lake vs Data Lake

2022-11-02

Should you switch your Data Lake to a Delta Lake? At first glance, Delta Lakes offer benefits and features like ACID transactions. But at what cost?

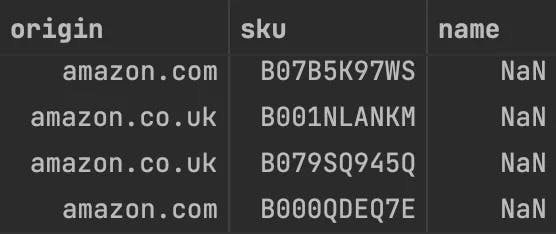

Bad Data: Nameless Amazon bestsellers

2022-05-03

Many Amazon marketplace customers know that its huge product catalogue has data quality issues. However, they might expect its top sellers, which t...